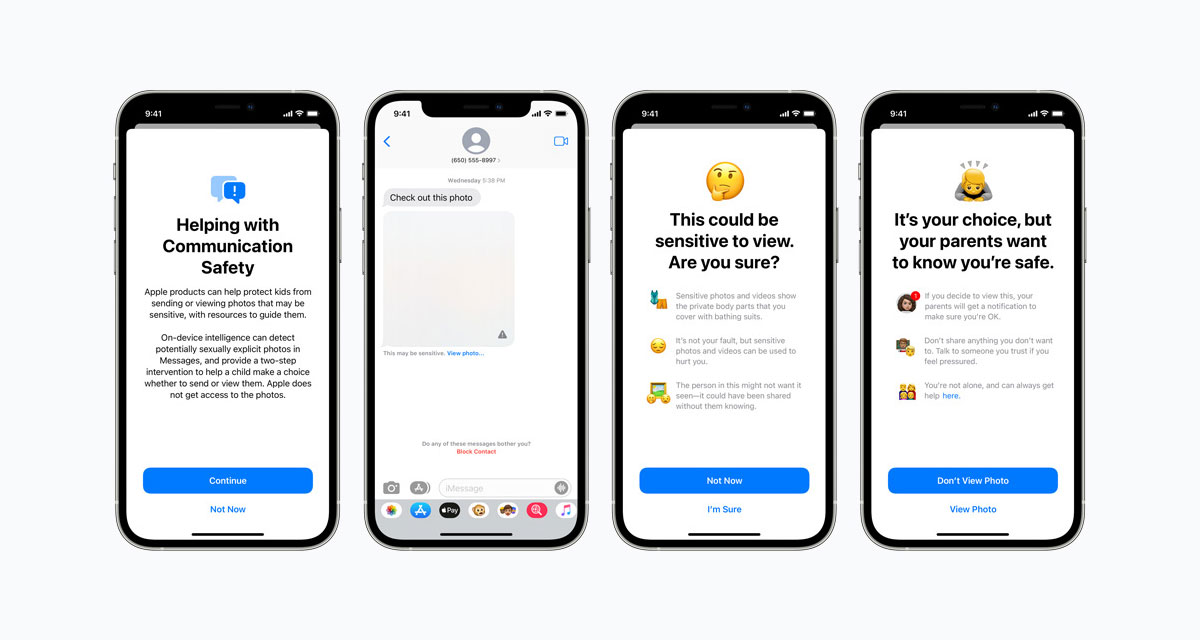

Apple’s new CSAM detection plans are supposed to help it weed out child exploitation images that are uploaded to iCloud Photos, but privacy advocates around the world have already spoken out against the steps Apple will take. Now, a new report says that Apple’s own employees have misgivings, too.

Apple’s CSAM detection will check every photo uploaded to iCloud Photos, comparing a neural hash of each image against hashes of known CSAM content. The first part of that check will be carried out on iPhones and iPads themselves, and it’s that on-device step which has people concerned.

A new Reuters report says that Apple employees have taken to Slack to voice their concerns.

Apple employees have flooded an Apple internal Slack channel with more than 800 messages on the plan announced a week ago, workers who asked not to be identified told Reuters. Many expressed worries that the feature could be exploited by repressive governments looking to find other material for censorship or arrests, according to workers who saw the days-long thread.

Past security changes at Apple have also prompted concern among employees, but the volume and duration of the new debate is surprising, the workers said. Some posters worried that Apple is damaging its leading reputation for protecting privacy.

Much of the concern revolves around whether a government could force Apple to check for other content, not just CSAM. Apple says that isn’t something that could happen, but that hasn’t been enough to satisfy skeptics so far.

Having to convince its own people, as well as the public, is a distraction the company could do without as the iPhone 13 announcement draws ever closer.
