Apple has announced new child safety measures that will come to iPhones, iPads, and Macs later this year. They will be available only in the United States at launch, with international expansion to follow over time.

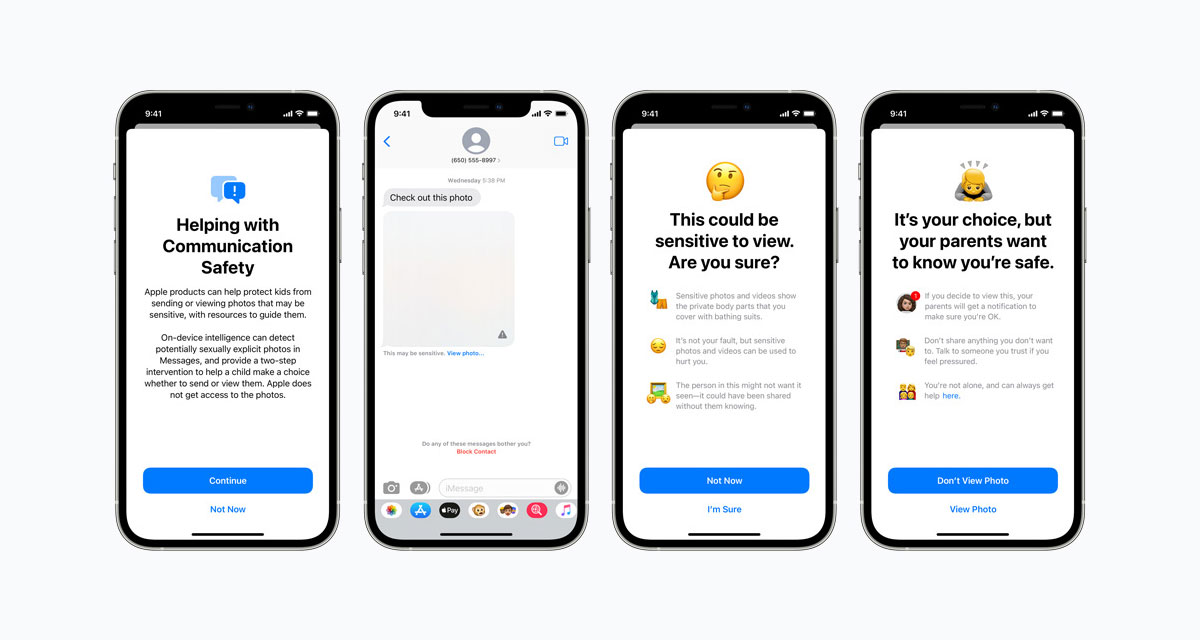

The first change is a new feature in the Messages app called Communication Safety.

Apple says the feature will warn children and their parents when a child receives or sends sexually explicit photos. If an image is determined to be explicit, it is automatically blurred and a warning is shown.

When a child attempts to view an image that Communication Safety has flagged, they receive a message telling them that the image they are about to view could be hurtful. Parents can also opt to receive an alert at that point.
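The receive-and-view flow described above can be sketched as a small decision function. This is a minimal illustration, not Apple's code: it assumes a hypothetical on-device classifier that outputs a score between 0 and 1, a made-up cutoff of `0.9`, and hypothetical names (`ReceivedImage`, `on_receive`, `on_view_attempt`). It covers only the receiving side; the sending-side warning would follow the same pattern.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical cutoff: Apple has not published how its on-device classifier decides.
EXPLICIT_THRESHOLD = 0.9
WARNING_TEXT = "This image may be hurtful. Are you sure you want to view it?"


@dataclass
class ReceivedImage:
    explicit_score: float  # assumed classifier output in [0.0, 1.0]
    blurred: bool = False


def on_receive(image: ReceivedImage) -> ReceivedImage:
    """Blur the image when the (hypothetical) classifier score reaches the cutoff."""
    image.blurred = image.explicit_score >= EXPLICIT_THRESHOLD
    return image


def on_view_attempt(image: ReceivedImage, parent_alerts_enabled: bool) -> Tuple[Optional[str], bool]:
    """Return (warning to show, whether to alert a parent) when the child taps the image."""
    if not image.blurred:
        return None, False  # unflagged images open normally, no alert
    return WARNING_TEXT, parent_alerts_enabled  # alert only if the parent opted in
```

Keeping the blur decision separate from the view attempt mirrors the sequence in the text: the image is blurred on arrival, and the warning and optional parental alert only come into play when the child tries to open it.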

Communication Safety will be part of the upcoming iOS 15, iPadOS 15, and macOS Monterey updates, all of which are expected to arrive in or around September.

Apple will also use on-device detection to determine whether images in a user's Photos app match known Child Sexual Abuse Material (CSAM). Images are checked before they are uploaded to iCloud Photos: the device compares each image's hash against an on-device list of hashes of known CSAM. Each match places a marker against the account. Once an undisclosed threshold is exceeded (essentially, once a certain number of matching images has been found), Apple will manually review the images and report the account to the authorities.
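The matching-and-threshold logic described above can be sketched in a few lines. This is a deliberately simplified illustration under stated assumptions: SHA-256 stands in for Apple's perceptual hash (NeuralHash), so unlike the real system it only matches byte-identical files; the threshold of `3` is made up; and the match count is kept in the clear, whereas Apple's design uses cryptography so that matches below the threshold are not revealed. The names `image_hash`, `Account`, and `check_before_upload` are hypothetical.

```python
import hashlib
from dataclasses import dataclass
from typing import Set

# Hypothetical value: Apple did not disclose the real threshold at announcement.
MATCH_THRESHOLD = 3


def image_hash(data: bytes) -> str:
    """Stand-in for a perceptual hash; SHA-256 only matches byte-identical files."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class Account:
    match_count: int = 0
    flagged_for_review: bool = False


def check_before_upload(account: Account, data: bytes, known_hashes: Set[str]) -> None:
    """Compare an image's hash with the known list before it goes to iCloud Photos."""
    if image_hash(data) in known_hashes:
        account.match_count += 1  # the "marker" placed against the account
    if account.match_count >= MATCH_THRESHOLD:
        account.flagged_for_review = True  # only now would manual review happen
```

A single accidental match does nothing on its own; only an account that accumulates several matches reaches the review step.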

Apple says this method offers better privacy than approaches that check images on a company's servers. Notably, devices with iCloud Photos disabled are not checked at all. Apple summarizes the system's benefits as follows:

• This system is an effective way to identify known CSAM stored in iCloud Photos accounts while protecting user privacy.

• As part of the process, users also can’t learn anything about the set of known CSAM images that is used for matching. This protects the contents of the database from malicious use.

• The system is very accurate, with an extremely low error rate: less than a one in one trillion chance per year of incorrectly flagging a given account.

• The system is significantly more privacy-preserving than cloud-based scanning, as it only reports users who have a collection of known CSAM stored in iCloud Photos.
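Apple's technical summary says the system relies on threshold secret sharing, so that an account's safety vouchers cannot be interpreted until the account crosses the match threshold. The sketch below shows the textbook version of that idea (Shamir's scheme), not Apple's implementation: treat the secret as a hypothetical key that would unlock an account's vouchers, with one share released per matching image. Any `threshold` shares recover the key; fewer reveal nothing useful. The prime, function names, and parameters are assumptions chosen for illustration.

```python
import secrets
from typing import List, Tuple

PRIME = 2**127 - 1  # a Mersenne prime; all arithmetic is done modulo this value


def split_secret(secret: int, threshold: int, num_shares: int) -> List[Tuple[int, int]]:
    """Shamir split: random polynomial of degree threshold-1 with f(0) = secret."""
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for c in reversed(coeffs):  # evaluate f(x) with Horner's rule
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares: List[Tuple[int, int]]) -> int:
    """Lagrange interpolation at x = 0; correct only when len(shares) >= threshold."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret
```

For example, with `split_secret(key, threshold=3, num_shares=5)`, any three of the five shares reconstruct `key`, while two shares yield an unrelated value. This is what lets a server hold vouchers for an account without being able to read them until enough matches exist.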

In addition, Siri is being updated to handle CSAM-related requests: users can ask it how to report such content and where to find help for children who need it.
