Apple’s annual WWDC event is under way right now, and while it’s online-only for the first time, that hasn’t stopped Apple from making some big announcements.

We saw iOS 14, iPadOS 14, watchOS 7, and macOS 11 Big Sur announced on Monday, and now we’ve also heard about new additions to Apple’s Vision framework that will allow iPhones and Macs to track hand and body movements.

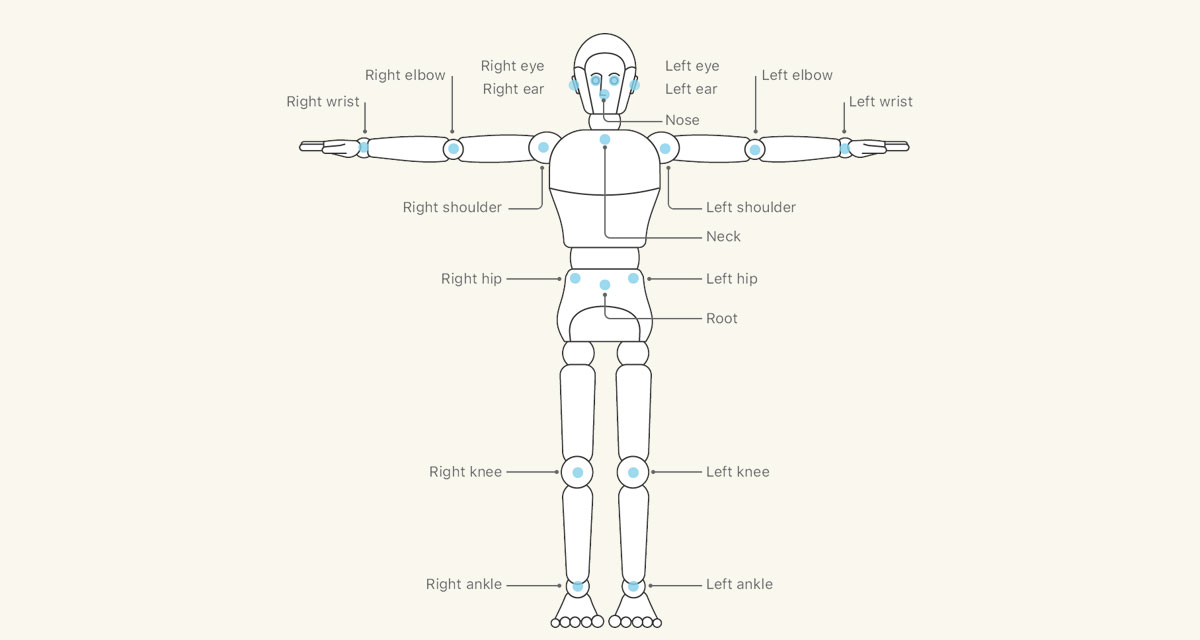

The details come from a WWDC session that developers can watch online. It shows that the framework will allow apps to track movement and poses and then carry out actions based on what they see. Apple describes the session like this:

> Explore how the Vision framework can help your app detect body and hand poses in photos and video. With pose detection, your app can analyze the poses, movements, and gestures of people to offer new video editing possibilities, or to perform action classification when paired with an action classifier built in Create ML.

Apple provided a few examples of how the new pose detection could be used. It suggested that an app could watch hand movements and then overlay a hand emoji depending on the pose being struck. Other ideas include a fitness app that tracks exercises and even an app that lets people write in the air and have an iPhone turn the movement into text.
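For developers wondering what the emoji idea might look like in practice, here is a rough sketch rather than Apple’s own sample code. It asks Vision for a single hand in a still image, reads the thumb and index fingertips, and picks an emoji based on how close together they are. The `HandGesture` enum, the `classify` helper, and both threshold values are our own illustrative assumptions, and the joint names follow the shipping iOS 14 SDK, which may differ from the first beta.

```swift
import Vision
import CoreGraphics

/// Hypothetical gesture-to-emoji mapping; this type is not part of Vision.
enum HandGesture: String {
    case pinch = "🤏"
    case open = "🖐"
}

/// Classifies a hand by the distance between thumb tip and index tip.
/// Points are in Vision's normalized image coordinates (0...1).
/// The 0.05 threshold is an arbitrary value chosen for illustration.
func classify(thumbTip: CGPoint,
              indexTip: CGPoint,
              pinchThreshold: CGFloat = 0.05) -> HandGesture {
    let dx = thumbTip.x - indexTip.x
    let dy = thumbTip.y - indexTip.y
    let distance = (dx * dx + dy * dy).squareRoot()
    return distance < pinchThreshold ? .pinch : .open
}

/// Runs hand pose detection on one image and returns a gesture,
/// or nil if no hand (or no confident fingertip) was found.
func detectGesture(in image: CGImage) throws -> HandGesture? {
    let request = VNDetectHumanHandPoseRequest()
    request.maximumHandCount = 1  // only look for one hand

    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])

    // Assumes a recent SDK where `results` is typed as
    // [VNHumanHandPoseObservation]; older SDKs need a cast from [Any].
    guard let hand = request.results?.first else { return nil }

    let thumbTip = try hand.recognizedPoint(.thumbTip)
    let indexTip = try hand.recognizedPoint(.indexTip)

    // Skip low-confidence points; 0.3 is an assumed cutoff.
    guard thumbTip.confidence > 0.3, indexTip.confidence > 0.3 else {
        return nil
    }
    return classify(thumbTip: thumbTip.location, indexTip: indexTip.location)
}
```

An app could call `detectGesture(in:)` on each captured frame and draw the returned emoji’s `rawValue` over the image. Keeping `classify` separate from the Vision call means the gesture rule can be tuned, or swapped for a Create ML classifier, without touching the detection code.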

None of this means anything until developers make use of it, but the potential of these new capabilities is huge. Hopefully plenty of developers already have app ideas in mind.

