It has been a while since KGI Securities analyst Ming-Chi Kuo last popped his head above the parapet with Apple-related information, but, thankfully, the famously accurate analyst is back.

This time around, Kuo has issued a research note to investors that outlines the inner workings, manufacturing processes, and underlying technology powering Apple's new "Face ID" system on the upcoming iPhone X.

Speculation has been rife over the last six months that Apple would pull Touch ID from its new iPhone and replace it with a facial recognition system. One driver behind such a drastic change is the Cupertino-based company's desire to remove the Home button and free up precious space for the stunning edge-to-edge display we expect to see on the new hardware.

Removing the Home button would also remove the Touch ID sensor. Instead of finding an innovative way to keep it, such as embedding it under the display, Apple appears to have opted for its new Face ID system, as revealed by the leaked iOS 11 GM firmware.

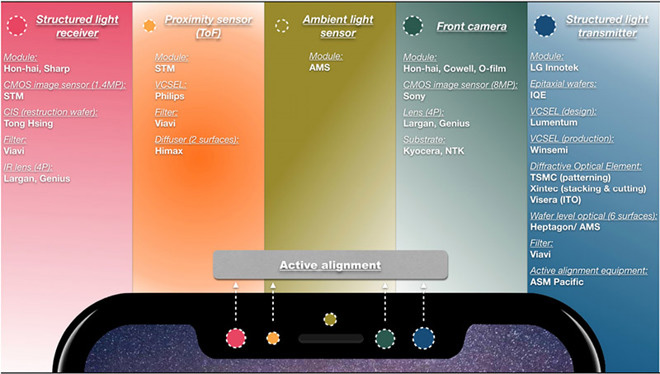

And now, thanks to Ming-Chi Kuo, we have additional details about the system in general. The research note suggests that Apple's new system relies on four main components housed in the notch at the top of the display: a structured light transmitter, a structured light receiver, the device's front camera, and a time-of-flight/proximity sensor.

It seems that the structured light modules are used by the device and its firmware to capture depth information, which is then integrated with data from the front-facing camera. That combined data is processed by advanced software algorithms to form a 3D image of what the device believes it is seeing.

> …the structured light modules are likely vertical-cavity surface-emitting laser (VCSEL) arrays operating in the infrared spectrum. These units are used to collect depth information which, according to Kuo, is integrated with two-dimensional image data from the front-facing camera. Using software algorithms, the data is combined to build a composite 3D image.

It's also believed that the system will use the proximity sensor to alert users instantly when they are too close to or too far from the device, owing to the distance constraints of the structured light technology. It's an interesting set of information that offers a little more clarity on the kind of experience users can expect should they choose to upgrade to the iPhone X when it launches.

(Via: AppleInsider)
