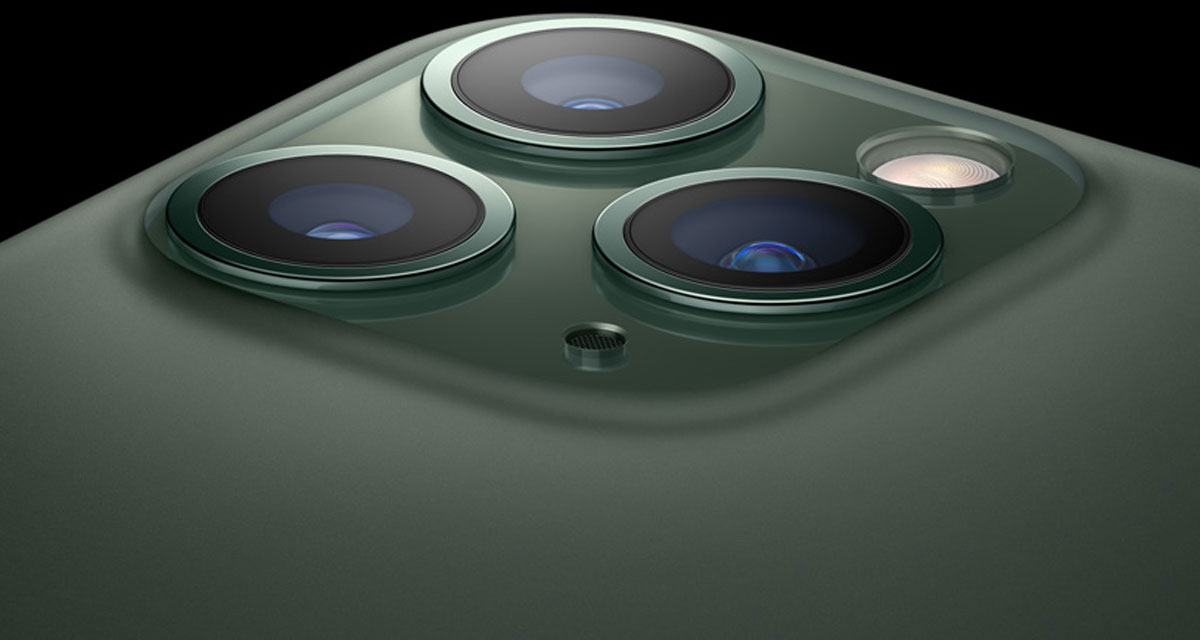

Here’s how the Deep Fusion camera feature in the iOS 13.2 beta will work on iPhone 11, iPhone 11 Pro and iPhone 11 Pro Max.

Apple announced Deep Fusion alongside the iPhone 11 and iPhone 11 Pro, and while the feature still isn’t available to everyone, the latest iOS 13.2 developer beta includes it. That, hopefully, means it won’t be too long before we get our hands on it.

Deep Fusion is Apple’s fancy new image processing setup, and it is supposed to give images better fidelity than was previously possible, especially on an iPhone. When it was announced, Apple showed off test images of people wearing sweaters, presumably because you could make out individual threads and other fine detail, something that isn’t normally possible. The images looked good, but we’ll need to see how things work out once we get our hands on it.

Deep Fusion will work differently depending on which camera you are using and what the conditions are.

- With the standard wide-angle lens, Smart HDR is used in bright scenes. When the light isn’t great, Deep Fusion kicks in, and in darker scenes Night Mode takes over. We’ve already seen how great that is.

- With the telephoto lens, Deep Fusion is used most of the time. Smart HDR is only used when the scene is very bright, and Night Mode works when it’s dark.

- The ultra wide lens always uses Smart HDR because it supports neither Night Mode nor Deep Fusion, so that’s worth bearing in mind.
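To make the per-lens rules above easier to follow, here is a small Python sketch that maps a lens and a coarse brightness level to a processing mode. This is not Apple’s code: the `pick_mode` function, the `Lens`/`Mode` enums and the five brightness buckets are all hypothetical, since Apple hasn’t published the real light thresholds. The sketch only encodes the rules as described in the list.

```python
from enum import Enum


class Lens(Enum):
    WIDE = "wide"
    TELEPHOTO = "telephoto"
    ULTRA_WIDE = "ultra wide"


class Mode(Enum):
    SMART_HDR = "Smart HDR"
    DEEP_FUSION = "Deep Fusion"
    NIGHT_MODE = "Night Mode"


# Hypothetical brightness buckets; Apple has not published real thresholds.
DARK, LOW, MEDIUM, BRIGHT, VERY_BRIGHT = range(5)


def pick_mode(lens: Lens, brightness: int) -> Mode:
    """Return the processing mode for a lens and brightness bucket,
    following the rules described in the article (illustrative only)."""
    if lens is Lens.ULTRA_WIDE:
        # The ultra wide camera supports neither Night Mode nor Deep Fusion.
        return Mode.SMART_HDR
    if brightness == DARK:
        # Wide and telephoto both fall back to Night Mode in the dark.
        return Mode.NIGHT_MODE
    if lens is Lens.WIDE:
        # Wide: Smart HDR in bright scenes, Deep Fusion when light isn't great.
        return Mode.SMART_HDR if brightness >= BRIGHT else Mode.DEEP_FUSION
    # Telephoto: Deep Fusion most of the time, Smart HDR only when very bright.
    return Mode.SMART_HDR if brightness == VERY_BRIGHT else Mode.DEEP_FUSION
```

For example, `pick_mode(Lens.TELEPHOTO, BRIGHT)` lands on Deep Fusion, while the same scene on the wide lens gets Smart HDR.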

Like all of Apple’s image processing, there’s a lot going on under the hood. Instead of taking one photo, Deep Fusion takes multiple shots and then fuses them – see what we did there? – into a single image. The Verge has a great rundown of how this works, but basically:

- The camera is already taking stills before you press the shutter button. Those stills are taken at a fast shutter speed, and when you take your photo, three additional shots and one longer exposure are added.

- The last four shots (the three additional frames and the long exposure) are merged to create a new image. Deep Fusion then picks the short exposure it thinks is best and merges it with the image it just created. Everything is then processed for noise reduction.

- After that, various other processing dark arts come into play depending on the subject of the photo.

- The final image is then put together and saved to the Photos app. The user doesn’t see any of this happen thanks to Apple’s lightning-fast A13 Bionic chip.
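The merging steps above can be sketched as a toy Python pipeline. To be clear, this is only an illustration of the idea, not Apple’s implementation. Frames are modelled as plain lists of brightness values. Every helper here is a hypothetical stand-in: `detail_score` (a simple neighbouring-pixel sharpness proxy for “the short exposure it thinks is best”), the blend `weight`, and `denoise` (a basic box blur). The sketch also assumes the best short exposure is chosen from the frames buffered before the shutter press. The subject-dependent “dark arts” step is left out entirely.

```python
from typing import List, Sequence

Frame = List[float]  # toy 1-D "image": one brightness value per pixel


def average(frames: Sequence[Frame]) -> Frame:
    """Pixel-wise mean of equally sized frames."""
    return [sum(px) / len(frames) for px in zip(*frames)]


def detail_score(frame: Frame) -> float:
    """Hypothetical sharpness proxy: total difference between neighbouring pixels."""
    return sum(abs(a - b) for a, b in zip(frame, frame[1:]))


def denoise(frame: Frame) -> Frame:
    """Toy noise reduction: 3-tap box blur (edges use the neighbours available)."""
    out = []
    for i in range(len(frame)):
        window = frame[max(0, i - 1): i + 2]
        out.append(sum(window) / len(window))
    return out


def fuse(buffered_short: Sequence[Frame], new_short: Sequence[Frame],
         long_exposure: Frame, weight: float = 0.5) -> Frame:
    # 1. Merge the three new short frames with the long exposure ("the last four shots").
    merged = average([*new_short, long_exposure])
    # 2. Pick the buffered short exposure with the most detail (assumed heuristic).
    best_short = max(buffered_short, key=detail_score)
    # 3. Blend it with the merged image; `weight` is a hypothetical parameter.
    blended = [weight * s + (1 - weight) * m for s, m in zip(best_short, merged)]
    # 4. Run noise reduction over the result.
    return denoise(blended)
```

The point of the structure is the split the article describes: the merged frames supply a cleaner base image, and the sharpest short exposure supplies the fine detail.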

It’s worth noting that the processing required here means you can’t use Deep Fusion in burst mode, which is a shame. It really only comes to the fore when you’re taking a deliberate, staged shot.

As we mentioned, we don’t yet have the software in hand to test ourselves, but The Verge goes into detail about how Apple’s magic happens here, so be sure to check that out, too.

(Source: The Verge)
