Apple has announced some important new accessibility features that are coming soon to iPhone, iPad, and Apple Watch. They include a new AssistiveTouch feature for the Apple Watch that allows users to control the wearable without touching the display.

The new AssistiveTouch feature is designed for people with limited mobility, and it sounds like something straight out of science fiction.

To support users with limited mobility, Apple is introducing a revolutionary new accessibility feature for Apple Watch. AssistiveTouch for watchOS allows users with upper body limb differences to enjoy the benefits of Apple Watch without ever having to touch the display or controls.

Using built-in motion sensors like the gyroscope and accelerometer, along with the optical heart rate sensor and on-device machine learning, Apple Watch can detect subtle differences in muscle movement and tendon activity, which lets users navigate a cursor on the display through a series of hand gestures, like a pinch or a clench. AssistiveTouch on Apple Watch enables customers who have limb differences to more easily answer incoming calls, control an onscreen motion pointer, and access Notification Center, Control Center, and more.

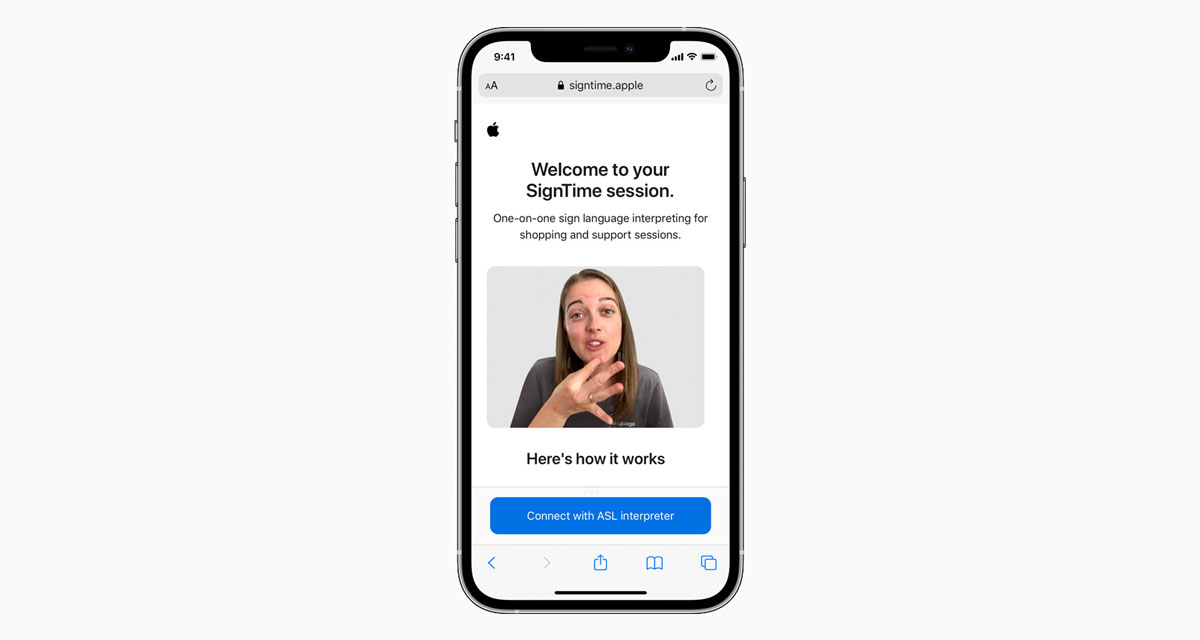

The accessibility features don’t stop there, with iPadOS also getting eye-tracking support that will allow users to control their tablet using eye motion.

iPadOS will support third-party eye-tracking devices, making it possible for people to control iPad using just their eyes. Later this year, compatible MFi devices will track where a person is looking onscreen and the pointer will move to follow the person’s gaze, while extended eye contact performs an action, like a tap.

Apple is also improving VoiceOver across the board, giving it the ability to describe images in more detail and, for the first time, to describe a person’s position within an image.

Apple is introducing new features for VoiceOver, an industry‑leading screen reader for blind and low vision communities. Building on recent updates that brought Image Descriptions to VoiceOver, users can now explore even more details about the people, text, table data, and other objects within images. Users can navigate a photo of a receipt like a table: by row and column, complete with table headers. VoiceOver can also describe a person’s position along with other objects within images — so people can relive memories in detail, and with Markup, users can add their own image descriptions to personalize family photos.

There’s a lot more going on as well, with Apple sharing all of the details via a new Newsroom post. All of these new accessibility features will come later this year, presumably as part of new software updates that will be announced during next month’s WWDC event.

