Google Glass, despite being shrouded in mystery, is one of the most exciting-looking gizmos around. Various video clips have surfaced that showcase its abilities, with the Project Glass team flirting with every possibility in a bid to turn their sci-fi-esque gadget into a real-life, marketable product.

Until now, we haven’t been given much information about how everything works, and nobody outside the development team has had a chance to try the device out. However, a patent application filed by Google offers some insight into the inner workings of its secretive Project Glass.

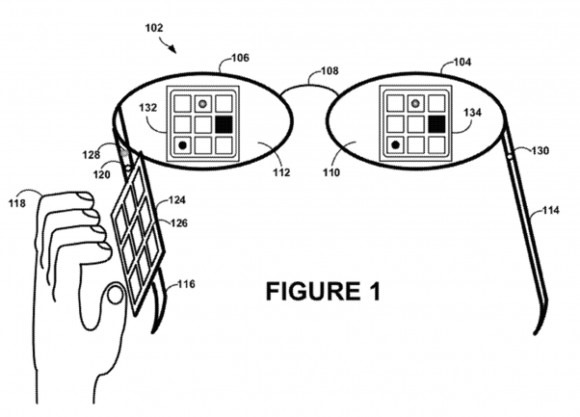

The controls are portrayed as a side-mounted touchpad (or physical pad) laid out as a 3×3 grid, which is reflected in the wearer’s line of sight. This lets the user make a selection by looking through the glasses rather than directly at the grid. The touchpad could track the proximity of fingers as well as touch, and with further development of the technology, it could show the user a virtual representation of their own hand, tricking the brain into believing the touchpad is in the field of view. This particular idea is shown here:

Of course, Project Glass doesn’t rely solely on the touchpad: speech, camera input, and a wireless keyboard are among the other ways users could interact with their Glasses.

Meanwhile, Google Glass might also have its very own "self-awareness" of the environment, reacting to its surroundings automatically without the need for user intervention. The patent application notes:

> A person’s name may be detected in speech during a wearer’s conversation with a friend, and, if available, the contact information for this person may be displayed in the multimode input field

Such a feature could be extremely handy when organizing social events, closing business deals, or in any other situation where someone’s contact information is useful to have. Google goes further, stating that the device could detect in-car ambience and automatically fire up a navigation app, on the assumption that the user is making some kind of trip.

The intelligence built into Project Glass seems to go on and on, and as SlashGear points out, the proximity sensor could detect that a wearer has gloves on in cold weather and readily switch to a more suitable method of input. With a smartphone, there’s no real way of using a touchscreen while wearing gloves (besides a stylus, special gloves, or a tongue(!)), but with Google Glass, the possibilities are seemingly endless.
